The VisionLib SDK 3.0 Release introduces major interface changes. Please make a backup of your project before upgrading to the new release and follow all sections below for migration guidance.

With 3.0, we implemented a paradigm shift from file-based model loading at runtime to model injection – i.e. streaming models into the backend directly from within Unity's scene hierarchy. While still supported, file-based model loading is now considered legacy.

Model injection uses the multi-model tracking setup centered around the TrackingAnchor. Since model injection is the new default, all our example scenes now use this setup, regardless of the number of objects being tracked.

The move to the multi-model tracking setup changes some behavior you might have become accustomed to. In scenes that used single-model tracking – e.g. the ModelTrackingSetup, SimpleModelTracking, and ImageInjection examples – the model remained static and only the camera moved during tracking. The camera's pose combined both SLAM and model tracking poses.

With multi-model tracking, the virtual camera's pose is determined by SLAM, while the model poses are determined by VisionLib's model tracking results. Without SLAM, the camera stays at the origin. With SLAM, the TrackingCamera keeps the camera's pose updated to the latest SLAM pose. The models, on the other hand, are moved by the RenderedObject component. This component positions each model so that its pose relative to the virtual camera matches the pose of the real tracked object relative to the real camera.
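The relationship between the camera pose and the model pose can be sketched with plain 4x4 transforms. This is only an illustration of the idea, not VisionLib's implementation: the function names are hypothetical, the poses are pure translations, and Unity's left-handed coordinate conventions are ignored.

```python
"""Sketch: a model's world pose composed from the camera pose and the tracking result."""


def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    """Build a 4x4 homogeneous transform that only translates."""
    return [[1, 0, 0, x],
            [0, 1, 0, y],
            [0, 0, 1, z],
            [0, 0, 0, 1]]


def model_world_pose(world_from_camera, camera_from_model):
    """Place the model so that its pose relative to the camera equals the tracking result."""
    return matmul(world_from_camera, camera_from_model)


# Hypothetical tracking result: the object sits 2 units in front of the camera.
camera_from_model = translation(0, 0, 2)

# Without SLAM the camera stays at the origin, so the model pose equals the tracking result.
pose_without_slam = model_world_pose(translation(0, 0, 0), camera_from_model)

# With SLAM the camera has moved 1 unit along x, and the model moves with it.
pose_with_slam = model_world_pose(translation(1, 0, 0), camera_from_model)

print(pose_without_slam[2][3], pose_with_slam[0][3])
```

In both cases the model keeps the same pose relative to the camera. Only its world pose changes when SLAM moves the camera.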

Scenes that previously already moved the camera and the models separately, such as the ARFoundation and HoloLens scenes, have not changed in this regard. They were only adapted to use the new TrackingAnchor component.

Affects: Unity model tracking scenes with tracking configurations that reference model files for tracking

Starting with VisionLib SDK 3.0, tracking models should no longer be specified in the tracking configuration file. Instead, models are added directly to the scene hierarchy from where new components forward them to VisionLib for tracking.

To migrate an existing scene to this new system, take the following steps:

1. Create an empty GameObject (e.g. named ModelTrackingReference) under the SceneContent GameObject.
2. Select the ModelTrackingReference GameObject.
3. Add a TrackingAnchor component to the ModelTrackingReference GameObject.
4. Remove all TrackingModel components from your scene and reparent GameObjects containing a TrackingModel under the ModelTrackingReference GameObject.
5. Select the ModelTrackingReference GameObject.
6. In the TrackingAnchor component:
   - Press the Tracking Geometry/Add TrackingMesh component to all models button. This will add a TrackingMesh component to all child models under the ModelTrackingReference. The TrackingMesh components enable the use of the individual models for tracking in VisionLib.
   - Set the Slam Camera field in the TrackingAnchor to the Camera.main.
   - Press the Use this tracking anchor as content button. This will add a RenderedObject component to this GameObject. The RenderedObject component applies the tracking result to the Transform of its GameObject. This way, the augmentation and the init pose guide are moved and placed correctly in the world. For more details, you can also consult Using Different Augmentation and Init Pose Guide.
7. In the VLTracking/Tracking Configuration:
   - Remove the modelURI parameter.
   - Set the type of the tracker to multiModelTracker.
8. Remove the VLInitCamera GameObject from your scene, if present. The InitCamera script is no longer used. Instead, the position of the TrackingAnchor relative to the MainCamera is used as the init pose by default. You have two options at this point: migrate an existing init pose from a .vl configuration file, or set an entirely new init pose yourself. For the former option, please follow the guide Discontinued Component: InitCamera below. To help with the latter option, you can obtain an initial estimate by pressing the Center Object in Slam Camera button on the TrackingAnchor. This will center your object in the view of the camera that is used to initialize the tracking. You can then fine-tune the init pose from there.
9. Remove the WorkSpaceManager from your scene, if present. The TrackingAnchor will take care of adding the WorkSpaces to VisionLib itself. To display the AutoInit learning progress, add an AutoInitProgressBar to your scene.
10. Move the Plane Constrained Mode component to the ModelTrackingReference GameObject.
11. Remove the auto-generated TrackingAnchor from the GameObject where the Plane Constrained Mode component was previously located.

For further details on the TrackingAnchor and the scene setup, see Using Models from the Unity Hierarchy.
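The two tracking configuration edits from the steps above (removing modelURI and switching the tracker type) can be sketched as a small script. This is a minimal sketch under stated assumptions: the configuration is plain JSON with a top-level tracker object holding type and parameters, and the file name and metric value are made up for illustration. It covers only those two edits; your configuration may need further changes.

```python
import copy
import json


def migrate_tracker_section(config):
    """Return a copy of the config with modelURI removed and the tracker type switched."""
    migrated = copy.deepcopy(config)
    tracker = migrated.setdefault("tracker", {})
    tracker["type"] = "multiModelTracker"
    # Models now come from the Unity scene hierarchy, so the file reference is dropped.
    tracker.get("parameters", {}).pop("modelURI", None)
    return migrated


# Hypothetical pre-3.0 configuration; layout and values are assumptions.
old_config = json.loads("""
{
  "type": "VisionLibTrackerConfig",
  "version": 1,
  "tracker": {
    "type": "modelTracker",
    "version": 1,
    "parameters": {
      "modelURI": "project-dir:MyModel.obj",
      "metric": "m"
    }
  }
}
""")

new_config = migrate_tracker_section(old_config)
print(json.dumps(new_config, indent=2))
```

The function works on a copy, so the original configuration stays available for comparison until the migrated scene has been verified.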

XRTracker

Affects: AR Foundation scenes that use VisionLib tracking

In VisionLib SDK 3.0, the generic TrackingAnchor replaces the XRTracker.

1. If an Init Camera is set in your XR Tracker (probably a GameObject called VLInitCamera), copy its transform into the ARCamera's transform.
2. Add a TrackingAnchor component somewhere.
3. Make sure the AR Camera is referenced as the SLAM Camera in the TrackingAnchor.
4. Remove the XRTracker component.
5. Remove the VLInitCamera GameObject. Instead, the position of the TrackingAnchor relative to the AR Camera is used as the init pose by default. To obtain an initial estimate for the init pose, press the Center Object in Slam Camera button on the TrackingAnchor. This will center your object in the view of the camera that is used to initialize the tracking.

Affects: HoloLens scenes that use VisionLib model tracking

In VisionLib SDK 3.0, the TrackingAnchor and the HoloLensGlobalCoordinateSystem together replace the HoloLensTracker component.

1. Add a TrackingAnchor component somewhere.
2. Make sure the HoloLens Camera is referenced as the SLAM Camera in the TrackingAnchor.
3. Replace the HoloLensTracker component with a HoloLensGlobalCoordinateSystem component.
4. Remove the HoloLensInitCamera GameObject. Instead, the position of the TrackingAnchor relative to its SLAM Camera is used as the init pose by default. To obtain an initial estimate for the init pose, press the Center Object in Slam Camera button on the TrackingAnchor. This will center your object in the view of the camera that is used to initialize the tracking.

Starting with version 3.0, the init pose is no longer set using the InitCamera (or HoloLensInitCamera) component. Instead, the pose of a TrackingAnchor's GameObject is now treated as the init pose.

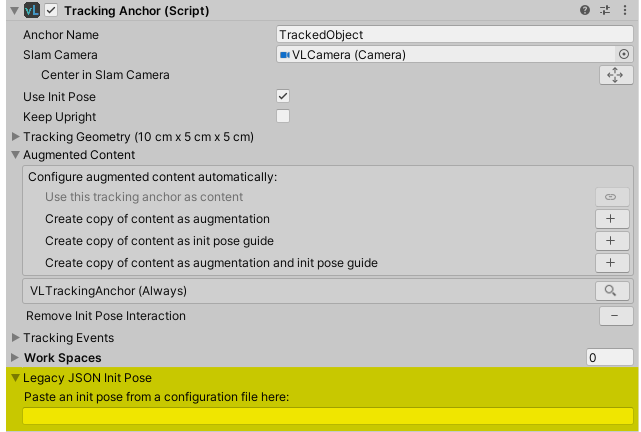

For cleaner data flow, TrackingAnchors no longer allow the use of init poses from tracking configuration files. Instead, the now legacy option to load init poses from config files has been moved to a separate component: InitPoseFromTrackingConfig. If you would like to migrate an existing project and continue to store your init pose in the tracking configuration file, first follow the migration guide "Use Models from Unity Scene for Tracking", then set up init pose loading as follows:

1. Select the GameObject with the TrackingAnchor (named ModelTrackingReference in the example above).
2. Add InitPoseFromTrackingConfig to this GameObject.
3. Set the SLAM Camera's transform to zero. (This camera is referenced in the TrackingAnchor.)

To persistently apply the init pose from a config file, you must set the corresponding Unity World pose in the TrackingAnchor's Transform while the editor is not in play mode and save the modified scene. You can then remove the now obsolete init pose parameter from your config file entirely.

There are two ways to apply the correct values to the TrackingAnchor's Transform:

1. Using InitPoseFromTrackingConfig (assuming you have already set this up as described above):
   - Copy the Transform of the TrackingAnchor's GameObject.
   - Paste the Transform values into the GameObject's Transform.
   - Remove the InitPoseFromTrackingConfig component and delete the init pose from your Tracking Configuration file. Both are no longer required.
2. Legacy JSON Init Pose:
   - Remove the InitPoseFromTrackingConfig component and delete the init pose from your Tracking Configuration file.

In 3.0 the TrackingModel component has been removed. Please remove any references to this script from your scene. To use the model injection feature, follow the Migration Guide - Switch to Using Models from The Scene Hierarchy for Tracking.

In 3.0, the WorkSpaceManager has been removed since the TrackingAnchor now handles the learning process itself. To display the AutoInit learning progress, add an AutoInitProgressBar to your scene.

This guide applies to scenes where a tracking target was previously constrained to a plane.

Plane Constrained Mode components must now be located on the same GameObject as the TrackingAnchor they constrain. In each affected scene, move the existing Plane Constrained Mode component accordingly. Then remove the auto-generated TrackingAnchor from the GameObject where the Plane Constrained Mode component was previously located.

The "keep upright" option on the TrackingAnchor holds the associated models upright with respect to the world up direction, regardless of camera movement. In image sequences with SLAM pose data recorded on iOS and Android with VisionLib versions prior to 3.0, the SLAM up vector is upside down w.r.t. Unity's world up direction. When using "keep upright" with these sequences, you must invert the World Up Vector parameter in all TrackingAnchors under Keep Upright/Advanced. Otherwise, the model will be held upside down. In the default case, this would mean changing the parameter from (0,1,0) to (0,-1,0).

In 3.0, we rotated the SLAM coordinate system on Android and iOS such that the SLAM up direction aligns with Unity's world up direction (see Release Notes 3.0). The workaround described above is therefore no longer required with image sequences newly recorded using the current VisionLib version.

We have changed the naming schemes for init data with this release. To read data from old projects, rename the files according to the tables in Release Notes 3.0.

If the model tracking gets lost and extendibleTracking is activated, VisionLib predicts the pose of the object in the world via the SLAM transform. In this situation, drift in the SLAM pose can place the predicted pose slightly beside the real object, which might prevent reinitialization of the tracking. To be able to automatically reset the tracking after a certain number of reinitialization attempts, we introduced the parameters allowedNumberOfFramesSLAMPrediction and allowedNumberOfFramesSLAMPredictionObjectVisible in 2.3 (see also Model Tracker). The second of these parameters limits the number of frames for which pure SLAM pose predictions may keep tracking alive while the SLAM-predicted object location is inside the camera's field of view. In 3.0 the default value of this parameter has been changed from -1 (limit deactivated) to 180. It has also been set to 45 in all HoloLens example scenes.
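To make the parameter more concrete, the following sketch sets the limit in a tracking configuration held as a Python dictionary and converts a frame limit into an approximate duration. It assumes the configuration is plain JSON with the parameters section located under tracker. The helper names and the 30 fps frame rate are hypothetical and only serve the illustration.

```python
import copy

PARAM = "allowedNumberOfFramesSLAMPredictionObjectVisible"


def set_slam_prediction_limit(config, frames):
    """Return a copy of the config with the frame limit set (-1 deactivates it)."""
    updated = copy.deepcopy(config)
    parameters = updated.setdefault("tracker", {}).setdefault("parameters", {})
    parameters[PARAM] = frames
    return updated


def limit_in_seconds(frames, fps):
    """Approximate duration of the limit, or None if the limit is deactivated."""
    if frames < 0:
        return None
    return frames / fps


# Hypothetical configuration skeleton; the layout is an assumption.
config = {"tracker": {"type": "multiModelTracker", "parameters": {}}}

unlimited = set_slam_prediction_limit(config, -1)
print(unlimited["tracker"]["parameters"][PARAM])

# At an assumed 30 frames per second, the new default of 180 frames is about 6 seconds.
print(limit_in_seconds(180, 30), limit_in_seconds(45, 30), limit_in_seconds(-1, 30))
```

Because the limit is counted in frames, the time it represents depends on the frame rate of the device.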

To deactivate that limit again (and keep tracking alive indefinitely):

1. Add allowedNumberOfFramesSLAMPredictionObjectVisible in the parameters section of the tracker.
2. Set its value to -1 (deactivated).

Affects: Tracking configurations created before 2.0.0

We deprecated a number of parameters and behaviors in VisionLib SDK version 2.0. Their future removal was communicated through obsolescence warnings. Some of the obsolete parameters have now been removed with VisionLib SDK version 3.0. The removed parameters are listed in the following, along with their respective replacements:

| Removed parameter name | New parameter name | Additional adjustments |
|---|---|---|
| metaioLineModelURI | lineModelURI | Metaio line models can be rotated 180 degrees around the y-axis to create VisionLib line models. |
| type: metaio in initPose | no type necessary anymore | To use a metaio init pose as a VisionLib init pose, you have to apply a 180 degree rotation around the y-axis from the right and a 180 degree rotation around the x-axis from the left. |
| scheme name with underscore (like project_dir, local_storage_dir, etc.) | Corresponding name with hyphens instead of underscores | |
| usePoseFiltering | use poseFilteringSmoothness instead | This parameter defines the smoothness of the pose filter. Lower values will make the filter very smooth. Higher values will make the filter less lagged. Setting the value to 0 turns off the filter. |
| showLineModelTracked | use "showLineModel": {"enabled": {"tracked": true}} instead | This option allows you to draw the line model into the camera image while the object is tracked successfully. |
| showLineModelTrackedColor | use "showLineModel": {"enabled": {"tracked": true}, "color": {"tracked": [0,255,0]}} instead | This color option can be used to change the color used for drawing the line model while the object is tracked successfully. |
| showLineModelCritical | use "showLineModel": {"enabled": {"critical": true}} instead | This option allows you to draw the line model into the camera image while the tracking is critical. |
| showLineModelCriticalColor | use "showLineModel": {"enabled": {"critical": true}, "color": {"critical": [0,255,0]}} instead | This color option can be used to change the color used for drawing the line model while the tracking is critical. |
| showLineModelLost | use "showLineModel": {"enabled": {"lost": true}} instead | This option allows you to draw the line model into the camera image while the tracked object is lost. |
| showLineModelLostColor | use "showLineModel": {"enabled": {"lost": true}, "color": {"lost": [0,255,0]}} instead | This color option can be used to change the color used for drawing the line model while the tracked object is lost. |
| showLineModelTrackedInvalid | use "showLineModel": {"color": "perCorrespondency"} instead | This option allows you to draw the color-coded visualization of the tracking status of each correspondence as a line model in the camera image. |
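Some of the replacements in the table are mechanical and could be scripted. The sketch below is one possible approach, not an official migration tool: it folds the flat showLineModel flags and colors for the tracked, critical, and lost states into the nested showLineModel object, and replaces underscores with hyphens in URI scheme names. The function names and the example values are hypothetical, and it assumes the old parameters section contains no showLineModel key yet. The remaining rows (Metaio models and init poses, usePoseFiltering, showLineModelTrackedInvalid) need manual attention.

```python
import copy
import re

# Maps the suffix of the removed flat parameters to the key used in the nested object.
STATES = {"Tracked": "tracked", "Critical": "critical", "Lost": "lost"}

# Matches a leading URI scheme such as "project_dir:".
SCHEME = re.compile(r"^([A-Za-z][A-Za-z0-9_]*):")


def migrate_show_line_model(parameters):
    """Return a copy with flat showLineModel* keys folded into "showLineModel"."""
    migrated = copy.deepcopy(parameters)
    enabled, color = {}, {}
    for suffix, state in STATES.items():
        flag = migrated.pop("showLineModel" + suffix, None)
        if flag is not None:
            enabled[state] = flag
        rgb = migrated.pop("showLineModel" + suffix + "Color", None)
        if rgb is not None:
            color[state] = rgb
    if enabled:
        migrated.setdefault("showLineModel", {}).setdefault("enabled", {}).update(enabled)
    if color:
        migrated.setdefault("showLineModel", {}).setdefault("color", {}).update(color)
    return migrated


def hyphenate_scheme(uri):
    """Replace underscores with hyphens in the scheme part of a URI only."""
    return SCHEME.sub(lambda m: m.group(1).replace("_", "-") + ":", uri)


# Hypothetical pre-2.0 parameters section.
old_parameters = {
    "showLineModelTracked": True,
    "showLineModelTrackedColor": [0, 255, 0],
    "showLineModelLost": True,
    "lineModelURI": "project_dir:my_lines.obj",
}

new_parameters = migrate_show_line_model(old_parameters)
new_parameters["lineModelURI"] = hyphenate_scheme(new_parameters["lineModelURI"])
print(new_parameters)
```

Only the scheme prefix is rewritten, so underscores in file names are left untouched.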

With Version 3.0.0, the TrackingAnchor component now manages its representation in the backend and requires a one-to-one mapping.

Previously, it was possible to define a single anchor in the tracking configuration file and use multiple TrackingAnchor components (for example, to achieve different smoothing rates) with the name of that anchor.

To achieve the same behavior, you now need to use a single TrackingAnchor but multiple RenderedObject components, each referencing this TrackingAnchor. Since Smooth Time is a property of the RenderedObject, the old behavior can still be achieved.

For more detailed information about the RenderedObject component, please consult Using Different Augmentation and Init Pose Guide.